Photo by hisperati

About 8 months ago I acquired a small startup called HitTail. You can read more about the acquisition here.

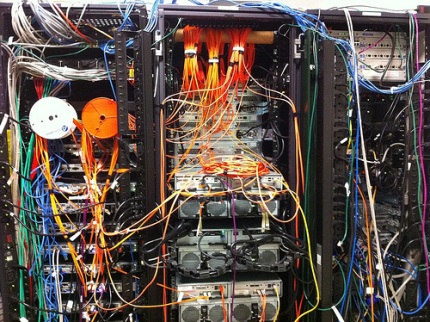

When the deal closed, the app was in bad shape. Within 3 weeks I had to move the entire operation, including a large database, to new servers. This required my first all-nighter in a while. Here is an excerpt of an email I sent to a friend the morning of September 16, 2011 at 6:47 am:

Subject: My First All Nighter in Years

Wow, am I tired. Worst part is my kids are going to be up in the next half hour. This is going to hurt 🙂

But HitTail is on a new server and it seems to be running really well. Feels great to have it within my control. There are still a couple pieces left on the old server, but they are less important and I’ll have them moved within a week.

I’ll write again in a few hours with the whole story. It’s insane how many things went wrong.

What follows is the tale of that long night…

The Setup

I acquired HitTail in late August and it was in bad shape. I had 3 priorities that I wanted to hit hard and fast, in the following order:

- Stability – Before I acquired it the site went down every few months, sometimes for several days on end. To stabilize it I needed to move it to new servers, fix a few major bugs, and move 250GB of data (1.2 billion rows).

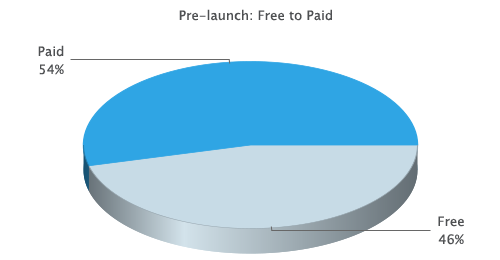

- Plug the Funnel – Conversion rates were terrible; I needed to look at each step of the process and figure out how to improve the percentage of people making it to the next step.

- Spread the Word – Market it.

The first order of business was to move to new servers. That involved overnighting 250GB of data on a USB drive to the new hosting facility, restoring from backup, running database replication until the databases were in sync, taking everything offline for 2-3 hours to merge two servers into one, testing everything, and flipping the switch.

If only it were that simple.

Thursday Morning, 10am: An Untimely Crash

We had planned to take the old servers offline at 10pm Pacific on Thursday, but around 10am one of them went down. The server itself was working, but the hard drive was failing and it wouldn’t serve web requests.

I received a few emails from customers asking why the server was down and I was able to explain that this should be the last downtime for many months. Everyone was appreciative and supportive. But I spent most of the day trying to get the server to stay alive for 12 more hours, with no luck. Half of the users had no access to it for the final 12 hours on the old servers.

Thursday Afternoon, 2pm: Where’s the Data?

We had meticulously planned to have all the data replicated between the old and new databases to ensure they would be in sync when we went to perform the migration. But there was one problem…

Replication had silently failed about 48 hours beforehand, and neither my DBA nor I had noticed. So at 2pm we realized we were literally gigs behind with the synchronization. With only 8 hours until the migration window began, we zipped up these gigs and started copying them from server to server. The dialog said it would take 5.5 hours – no problem! We had 2.5 hours of leeway.
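In hindsight, even a crude staleness check would have caught the failure long before the 48-hour mark. What follows is only a sketch of the idea, not what we actually ran: it assumes you can read the timestamp of the newest row on both the source and the replica, and the names `replication_lag`, `is_stale`, and the 15-minute threshold are made up for illustration.

```python
from datetime import datetime, timedelta


def replication_lag(source_latest, replica_latest):
    """How far the replica's newest row trails the source's newest row."""
    # Clamp at zero so a replica that is momentarily "ahead" reports no lag.
    return max(source_latest - replica_latest, timedelta(0))


def is_stale(source_latest, replica_latest, max_lag=timedelta(minutes=15)):
    """True when the replica has fallen further behind than we tolerate."""
    return replication_lag(source_latest, replica_latest) > max_lag


# Hypothetical timestamps: the source is current, the replica stopped 48 hours ago.
source_latest = datetime(2011, 9, 15, 14, 0)
replica_latest = source_latest - timedelta(hours=48)

if is_stale(source_latest, replica_latest):
    print("Replica is behind by", replication_lag(source_latest, replica_latest))
```

Run on a schedule and wired to an email or pager alert, a check like this turns a silent failure into a loud one.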

Thursday Night, 8pm: Copy and Paste

My DBA checked on the copying a few times in the middle of his night (he’s in the UK), and it eventually failed with 10% remaining. At that point we knew that even if we got everything done according to plan we wouldn’t have data for the most recent 48 hours. Grrr.

In a last ditch effort to get the data across the wire we selected the info from a single table that changes the most and copied it to the new server, which took only around 5 minutes. The problem was that this data should have been processed by our fancy keyword suggestion algorithm and it hadn’t been.

Friday Morning, 1am: Panic Sets In

It was about this time I began to panic. Trying to process the data using existing code was not going to happen – the algorithm is written in a mix of JavaScript and Classic ASP, which couldn’t be executed in the same fashion that it is when the app runs under normal conditions. And it wouldn’t run fast enough to process the millions of rows that needed it in any realistic amount of time.

So I did one of the craziest things I’ve done in a while. I spent 4 hours, from approximately 11pm until 3am, writing and running a Windows Forms app that combined the JavaScript and Classic ASP into a single assembly – with the JavaScript being compiled directly into a .NET DLL that I referenced from C# code. Then I translated the Classic ASP (VBScript) into C# and prayed it would work.

And after 2 hours of coding and 2 hours of execution, it worked.

Friday Morning, 3am: It Gets Worse…

During this time the DBA was trying to merge two databases – copying 75 million rows of data from one DB to another. This was supposed to take 3-5 hours based on tests the DBA had run.

But it was taking 20x longer. The disks were insanely slow because we were running during their backup window and some of the data was on a shared SAN. By 3am, when we should have been wrapping up the entire process, we were 10% done with the 75 million rows.

By 6am we had to make a call. It was already 9am on the East Coast and customers would surely be logging in soon if they hadn’t already. I was exhausted and at my wits’ end with the number of unexpected failures, and I asked my DBA what our options were.

After some discussion we decided to re-enable the application so users could access it while we continued copying the 75 million rows, throttled with a forced sleep so the app could run with surprisingly little performance impact. It took 4 days for all of the data to copy, but we didn’t receive a single complaint in the meantime.

Lucky for us, no one noticed. And the app running on the new hardware was 2-3x more responsive than on the old setup.
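For the curious, the "copy with a forced sleep" idea looks roughly like the sketch below. This is not the script my DBA ran. It is a minimal illustration that uses SQLite as a stand-in database, and the `keywords` table, its columns, the batch size, and the pause length are all assumptions made up for the example.

```python
import sqlite3
import time


def copy_in_batches(src, dst, batch_size=1000, pause_seconds=0.5, sleep=time.sleep):
    """Copy rows from src.keywords to dst.keywords in id order.

    Pauses after every batch so the live app gets a turn at the disks.
    Returns the number of rows copied.
    """
    last_id = 0
    copied = 0
    while True:
        # Keyset pagination: resume after the last id we copied.
        rows = src.execute(
            "SELECT id, phrase FROM keywords WHERE id > ? ORDER BY id LIMIT ?",
            (last_id, batch_size),
        ).fetchall()
        if not rows:
            return copied
        dst.executemany("INSERT INTO keywords (id, phrase) VALUES (?, ?)", rows)
        dst.commit()
        last_id = rows[-1][0]
        copied += len(rows)
        sleep(pause_seconds)  # the forced sleep that keeps the app responsive


def make_db(row_count):
    """Build an in-memory database holding row_count sample rows."""
    db = sqlite3.connect(":memory:")
    db.execute("CREATE TABLE keywords (id INTEGER PRIMARY KEY, phrase TEXT)")
    db.executemany(
        "INSERT INTO keywords (id, phrase) VALUES (?, ?)",
        [(i, f"phrase {i}") for i in range(1, row_count + 1)],
    )
    db.commit()
    return db


if __name__ == "__main__":
    source, target = make_db(25), make_db(0)
    total = copy_in_batches(source, target, batch_size=10, pause_seconds=0.01)
    print("rows copied:", total)
```

The trade-off is the one we lived with: small batches and a pause between them make the copy take far longer, but each batch holds the disks only briefly, so users barely notice.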

Friday Morning, 6:45am: Victory

After some final testing I logged off at 6:45am Friday morning with a huge sigh of relief. I dashed off the email you read in the intro, then headed to bed to be awoken by my kids an hour later. Needless to say, I had a big glass of wine the following night.

Building a startup isn’t all the fun you read about on Hacker News and TechCrunch…sometimes it’s even better.